Introduction

In today’s data-driven world, businesses generate vast amounts of information every second. Managing, processing, and analyzing this large-scale data efficiently is crucial for making informed decisions. Hadoop, an open-source framework designed for distributed storage and processing of large datasets, plays a pivotal role in enabling this. Whether you’re a data analyst, software developer, or business strategist, a working knowledge of Hadoop helps you understand modern data management.

In this article, we’ll explore the origins of Hadoop, its primary use cases, and how you can integrate Hadoop into your own projects.

The Origins of Hadoop

Hadoop was created to address the growing need to process data sets too large for traditional single-machine systems to handle efficiently. Its design was inspired by two papers published by Google: one on the Google File System (GFS) in 2003 and one on the MapReduce programming model in 2004. These systems allowed Google to store and process massive amounts of data distributed across many machines.

Doug Cutting and Mike Cafarella began the work that became Hadoop around 2005 while building an open-source web search engine called Nutch. They needed a system capable of processing billions of pages of web data across hundreds of servers. In 2006 the storage and processing code was split out of Nutch into its own project. The name “Hadoop” comes from a toy elephant that belonged to Cutting’s son, which is also why the project’s logo is an elephant.

Hadoop has since grown under the Apache Software Foundation, becoming one of the most widely used frameworks for distributed data processing and big data analytics.

How Hadoop Works

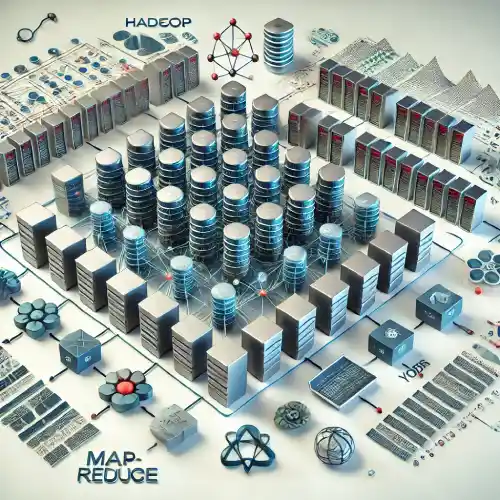

Hadoop’s storage and processing are built on two key components:

- Hadoop Distributed File System (HDFS): This system splits files into large blocks (128 MB by default) and distributes them across multiple machines. Each block is replicated on several machines (three by default), so data is stored redundantly and can be retrieved even if some machines fail.

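- MapReduce: The programming model behind Hadoop’s data processing power, MapReduce splits a large job into smaller tasks. A map phase processes each chunk of input in parallel and emits key-value pairs; a reduce phase then combines all values that share a key. Because the tasks run in parallel, ideally on the machines that already hold the data, it is possible to handle vast amounts of data efficiently.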
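To make the HDFS storage model concrete, here is a minimal single-process sketch of block splitting and replica placement. It is not how HDFS is implemented: the round-robin placement and the node names are assumptions made for illustration (real HDFS placement is rack-aware), while the 128 MB block size and replication factor of 3 are the HDFS defaults.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # HDFS default block size (dfs.blocksize)
REPLICATION = 3                 # HDFS default replication factor (dfs.replication)


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the sizes (in bytes) of the blocks a file is split into."""
    full, rest = divmod(file_size, block_size)
    return [block_size] * full + ([rest] if rest else [])


def place_replicas(num_blocks, nodes, replication=REPLICATION):
    """Assign each block to `replication` distinct nodes, round-robin.

    Simplified, hypothetical policy: real HDFS also considers racks and load.
    """
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


def readable_after_failure(placement, failed_nodes):
    """A file stays readable if every block keeps at least one live replica."""
    return all(
        any(node not in failed_nodes for node in replicas)
        for replicas in placement.values()
    )


blocks = split_into_blocks(300 * 1024 * 1024)  # a 300 MiB file
placement = place_replicas(len(blocks), ["node1", "node2", "node3", "node4"])
print(len(blocks))                                              # 3
print(readable_after_failure(placement, {"node1", "node2"}))    # True
```

With three replicas per block, losing any two nodes still leaves at least one copy of every block, which is the property the fault-tolerance claim rests on.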

The core Hadoop distribution also includes:

- YARN (Yet Another Resource Negotiator): Manages resources and schedules tasks across the Hadoop cluster.

- Hadoop Common: A set of utilities and libraries that support other Hadoop components.

Use Cases of Hadoop

Hadoop has become the go-to solution for companies across industries to tackle big data challenges. Here are some of the most prominent use cases:

1. Data Warehousing and Analytics

One of Hadoop’s most common use cases is storing and analyzing large datasets. Companies like Facebook, Amazon, and Netflix have used Hadoop to manage user behavior data and deliver personalized experiences. Hadoop allows businesses to store both structured and unstructured data, making it well suited to tasks like business intelligence and reporting.

2. Log Data Processing

Many organizations generate extensive log data from various systems (servers, websites, applications, etc.). Hadoop helps in processing these logs to gain insights into user activity, system performance, or potential security issues.
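As a rough illustration of the idea, the sketch below counts HTTP status codes in map/reduce style within a single Python process. The log lines and their four-field layout are hypothetical; real logs would need a parser matched to their actual format, and on a cluster the map and reduce steps would run as distributed tasks.

```python
from collections import Counter

# Hypothetical access-log lines in a simplified "ip method path status" layout.
SAMPLE_LOGS = [
    "10.0.0.1 GET /index.html 200",
    "10.0.0.2 GET /missing 404",
    "10.0.0.1 POST /login 200",
    "10.0.0.3 GET /admin 500",
    "malformed line",
]


def map_status(line):
    """Map step: emit (status, 1) for a well-formed line, nothing otherwise."""
    parts = line.split()
    if len(parts) == 4 and parts[3].isdigit():
        yield parts[3], 1


def reduce_counts(pairs):
    """Reduce step: sum the counts emitted for each status code."""
    totals = Counter()
    for status, count in pairs:
        totals[status] += count
    return dict(totals)


pairs = [pair for line in SAMPLE_LOGS for pair in map_status(line)]
status_counts = reduce_counts(pairs)
print(status_counts)  # {'200': 2, '404': 1, '500': 1}
```

Skipping malformed lines in the map step, rather than failing, is a deliberate choice here: one bad record should not abort a job over a very large log file.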

3. Recommendation Engines

Hadoop’s ability to process huge datasets in parallel makes it suitable for building recommendation engines. Companies like Spotify and Netflix have used Hadoop to analyze user preferences and recommend music, movies, or products based on individual behaviors.

4. Fraud Detection

In industries like finance and insurance, Hadoop helps detect fraudulent activity by analyzing large sets of transactional data. Its distributed nature allows businesses to scan millions of historical transactions in batch jobs and identify irregular patterns. MapReduce itself is batch-oriented, so real-time detection usually pairs Hadoop with a stream-processing engine such as Spark Streaming.
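As a toy illustration of what identifying an irregular pattern can mean, the sketch below groups transactions by account and flags amounts that sit far from that account’s mean. The records, the z-score rule, and the threshold of 2.0 are all assumptions chosen for the example; production fraud models are considerably more involved.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Hypothetical (account_id, amount) records.
TRANSACTIONS = [
    ("acct-1", 20.0), ("acct-1", 25.0), ("acct-1", 22.0),
    ("acct-1", 21.0), ("acct-1", 24.0), ("acct-1", 950.0),
    ("acct-2", 100.0), ("acct-2", 110.0), ("acct-2", 105.0),
]


def flag_outliers(transactions, threshold=2.0):
    """Flag amounts more than `threshold` standard deviations from the account mean."""
    by_account = defaultdict(list)
    for account, amount in transactions:
        by_account[account].append(amount)  # comparable to grouping by key in a shuffle

    flagged = []
    for account, amounts in by_account.items():
        mu = mean(amounts)
        sigma = pstdev(amounts)
        if sigma == 0:
            continue  # all amounts identical, nothing to flag
        for amount in amounts:
            if abs(amount - mu) / sigma > threshold:
                flagged.append((account, amount))
    return flagged


flagged = flag_outliers(TRANSACTIONS)
print(flagged)  # [('acct-1', 950.0)]
```

Grouping by account before scoring mirrors how a MapReduce job would key records by account so that each reducer sees one account’s full history.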

5. Machine Learning

Hadoop provides a foundation for building large-scale machine learning models. Data scientists can use frameworks like Apache Mahout and Spark (which integrate with Hadoop) to prepare massive datasets and train models on them, so that predictions draw on far more data than a single machine could hold.

How to Utilize Hadoop in Your Projects

If you are considering Hadoop for your projects, here are the steps to implement it effectively:

1. Assess Your Data Needs

Before diving into Hadoop, it’s essential to determine whether your data processing needs justify its implementation. Hadoop excels in environments with vast amounts of unstructured data (such as text, images, videos, and logs) that require distributed processing.
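A back-of-the-envelope capacity estimate can help with this assessment. The sketch below is only a rough sizing aid: the replication factor of 3 is the HDFS default, but the 25% headroom for temporary and intermediate files and the per-node disk size are assumptions you would replace with your own figures.

```python
import math


def raw_storage_tb(logical_tb, replication=3, headroom=0.25):
    """Raw disk needed for `logical_tb` of data.

    replication: copies of each block (3 is the HDFS default).
    headroom: assumed fraction of disk kept free for temporary files.
    """
    return logical_tb * replication / (1 - headroom)


def nodes_needed(logical_tb, disk_per_node_tb, replication=3, headroom=0.25):
    """Number of storage nodes, rounded up to a whole machine."""
    raw = raw_storage_tb(logical_tb, replication, headroom)
    return math.ceil(raw / disk_per_node_tb)


print(raw_storage_tb(100))    # 400.0
print(nodes_needed(100, 48))  # 9
```

If the estimate comes out at one or two machines, that is a hint that a single database or server may serve the workload more simply than a cluster.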

2. Set Up a Hadoop Cluster

A Hadoop cluster consists of multiple machines that work together to store and process data. You can set up a cluster on-premises or use cloud services like Amazon EMR, Google Cloud Dataproc, or Microsoft Azure HDInsight to deploy a Hadoop environment in the cloud. Many businesses prefer cloud solutions due to the scalability and reduced infrastructure management.

3. Data Ingestion

Several tools in the Hadoop ecosystem collect, transform, and load data into HDFS. Popular ones include:

- Apache Flume: Used to collect, aggregate, and move large amounts of log data into HDFS.

- Apache Sqoop: Transfers data between Hadoop and relational databases like MySQL or PostgreSQL.
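For a one-off load of files that are already on local disk, the `hdfs dfs` command-line client that ships with Hadoop is often enough. The sketch below builds the two commands involved and runs them only if an `hdfs` executable is found on the `PATH`. The file name and target directory are hypothetical placeholders.

```python
import shutil
import subprocess


def build_put_commands(local_path, hdfs_dir):
    """Return the `hdfs dfs` commands that create a directory and upload a file."""
    return [
        ["hdfs", "dfs", "-mkdir", "-p", hdfs_dir],           # create target dir if missing
        ["hdfs", "dfs", "-put", "-f", local_path, hdfs_dir],  # upload, overwriting if present
    ]


def ingest(local_path, hdfs_dir):
    """Run the upload if the Hadoop client is installed; report whether it ran."""
    commands = build_put_commands(local_path, hdfs_dir)
    if shutil.which("hdfs") is None:
        return False  # no Hadoop client on this machine, nothing executed
    for command in commands:
        subprocess.run(command, check=True)
    return True


# Hypothetical paths, printed rather than executed.
for command in build_put_commands("access.log", "/data/raw/logs"):
    print(" ".join(command))
```

Flume and Sqoop become worthwhile when the load is continuous or comes from a database rather than from files.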

4. Data Processing with MapReduce or Spark

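Once data is stored in HDFS, you can process it using MapReduce or frameworks like Apache Spark, which is often faster because it keeps intermediate data in memory and which offers higher-level APIs. MapReduce jobs are natively written in Java; Hadoop Streaming lets you write the mapper and reducer in Python or any other language that can read standard input and write standard output, making MapReduce accessible to most developers.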
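The classic first MapReduce job is a word count. The sketch below simulates its three stages (map, shuffle and sort, reduce) in a single Python process, using the tab-separated key and value records that Hadoop Streaming scripts conventionally exchange. The function names and sample input are illustrative, not part of any Hadoop API.

```python
from itertools import groupby


def mapper(lines):
    """Map: emit one 'word<TAB>1' record per word."""
    for line in lines:
        for word in line.lower().split():
            yield f"{word}\t1"


def reducer(sorted_records):
    """Reduce: sum the counts of consecutive records that share a key."""
    parsed = (record.split("\t") for record in sorted_records)
    for word, group in groupby(parsed, key=lambda kv: kv[0]):
        yield word, sum(int(count) for _, count in group)


def run_local(lines):
    """Simulate map -> shuffle/sort -> reduce without a cluster."""
    shuffled = sorted(mapper(lines))  # Hadoop sorts records by key between phases
    return dict(reducer(shuffled))


counts = run_local(["big data big clusters", "data moves to code"])
print(counts["big"], counts["data"], counts["code"])  # 2 2 1
```

In an actual Hadoop Streaming job, the mapper and reducer would be separate scripts that read from standard input and write to standard output, supplied to the streaming jar through its `-mapper` and `-reducer` options; the framework performs the sort between them.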

5. Data Analysis and Visualization

After processing, data can be analyzed with Hive, which offers a SQL-like query language (HiveQL), or with Pig, which uses a dataflow scripting language called Pig Latin. For visualization, tools like Apache Zeppelin, Tableau, or Power BI can be integrated to create interactive dashboards and reports.

Advantages of Hadoop

- Scalability: Hadoop can scale from a single server to thousands of machines, handling petabytes of data seamlessly.

- Fault Tolerance: HDFS replicates data across multiple nodes, ensuring that even if some machines fail, data is still available.

- Cost-Effectiveness: Since Hadoop runs on commodity hardware and is open-source, it is often more affordable than proprietary data management solutions that require specialized hardware or licenses.

- Flexibility: Hadoop can store any type of data (structured, semi-structured, or unstructured) and process it efficiently.

Conclusion

Hadoop revolutionized the way businesses manage, process, and analyze large datasets. Its roots in Google’s published research and its subsequent development under the Apache Software Foundation have made it a cornerstone of big data analytics. Whether you’re building a recommendation engine, analyzing log data, or creating a data warehouse, Hadoop offers a reliable, scalable, and cost-effective solution.

If you’re dealing with massive data sets and need a powerful tool for distributed storage and processing, integrating Hadoop into your project is a smart choice. By setting up a Hadoop cluster and using its ecosystem tools, you can unlock the potential of big data and drive valuable insights for your business.

Frequently Asked Questions (FAQs)

1. What programming languages are supported by Hadoop?

Hadoop’s core components are written in Java, and Java is the native language for MapReduce jobs. Python and other scripting languages are supported through Hadoop Streaming, and C++ through Hadoop Pipes.

2. Can I run Hadoop in the cloud?

Yes, the major cloud providers offer managed Hadoop services, such as Amazon EMR on AWS, Google Cloud Dataproc, and Microsoft Azure HDInsight, allowing you to run Hadoop clusters in the cloud without managing the underlying hardware.

3. Is Hadoop still relevant in 2024?

Yes, although its role has shifted. Newer engines like Apache Spark have replaced MapReduce for many workloads, but Spark itself often runs on YARN and reads from HDFS, and Hadoop remains part of the big data stack in many enterprises due to its reliability and flexibility.